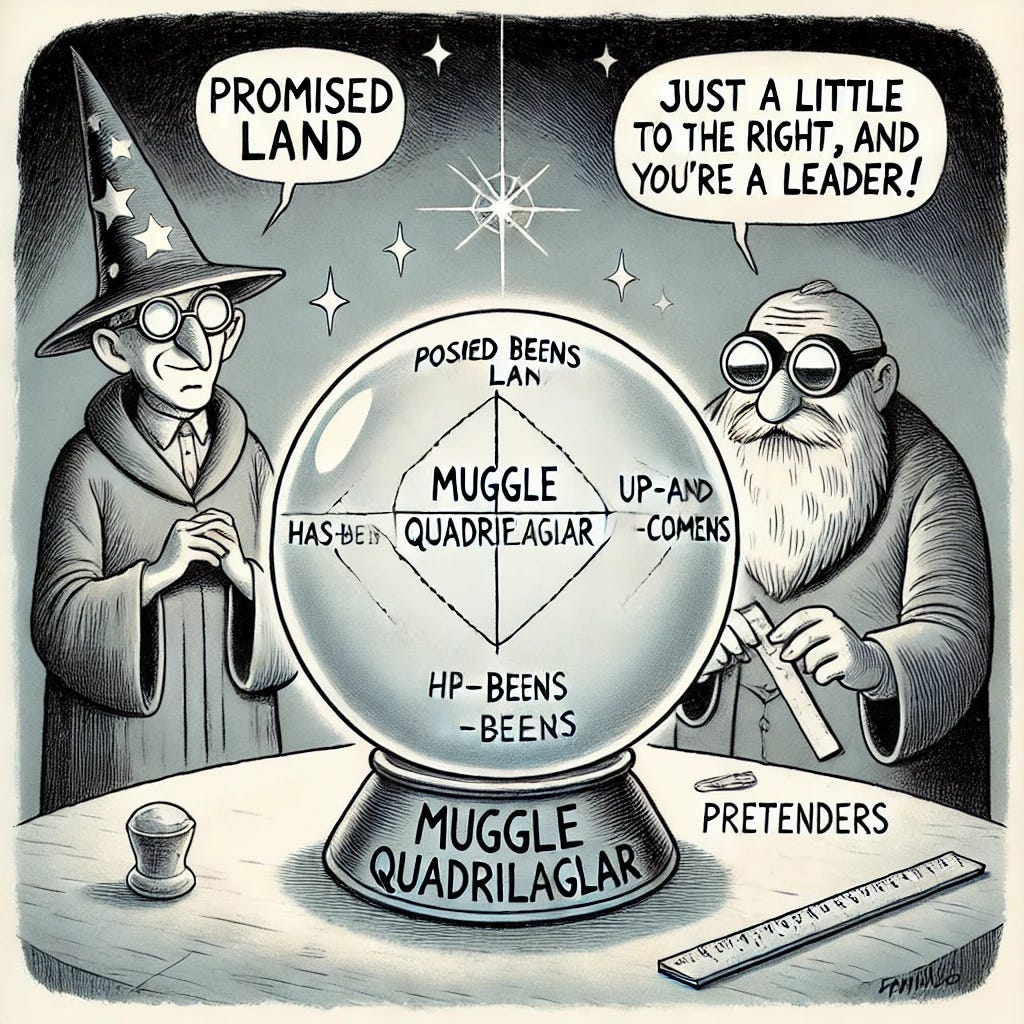

Thee Who Shall Not Be Named and the Muggle Quadrilateral.

A Critical Look at Industry Rankings and the Illusion of Objectivity

I was going to sit this one out because arguing over the placement of little blue dots in squares by industry gatekeepers feels like yesterday's news.

But seeing takedown requests sent to analysts and journalists who posted their own commentary was unsettling. Meanwhile, the CEO of an implied market leader posting a modified image to measure vendors' centimeter-level movements seemed both trite and hypocritical.

So, I finally read the report (thanks, NICE, for the complimentary copy).

Honestly, I’m still searching for the "magic." Many claim there's vendor bias due to "pay-to-play" perceptions, but to me, it reads more like Confirmation Bias. The report aims to present an objective view, while at the same time not stepping on the toes of the companies being covered. Call that what you will.

This year's report feels like a briefing from 2020 rather than 2024, missing the core of one of the largest market shifts since CCaaS emerged. I've seen far more balanced and quantified research from independent analysts, media outlets, and even commission-based Sales Agents working with those vendors.

But my real issue is with vendors making big proclamations, then crying foul at any level of scrutiny. That's gaslighting. And we deserve better.

So let’s be clear. The report itself doesn’t reveal any proprietary information that isn’t already in the public domain or easily accessible by issuing an RFP. The proprietary nature lies solely in their analysis and representation of that information—in other words, Opinions, not Facts. I’m totally cool with that, but maybe it’s time we analyze the analysis.

And so we begin: “Thee Who Shall Not Be Named” and the “Muggle Quadrilateral.”

Let's start by noticing a litany of contradictions.

Even a junior wizard will tell you that successful AI requires two core capabilities: 1) Data Access and 2) Robust, well-documented APIs.

So why, then, would a vendor be ranked highly for AI while simultaneously being called out for deficiencies in data and reporting? Or, why is having APIs touted as a requirement when, upon any level of inspection, we find that a "leader" has some of the worst APIs in the market? The inconsistencies are baffling.

It's fair to mention that a particular vendor may require support from software engineers, but it would be more useful to potential buyers to note that even something as simple as setting up a holiday closure schedule demands a developer or a third-party bolt-on. The report pays no mind to total cost of ownership, operational complexity, or ongoing maintenance, including the extra costs incurred when third-party tools or consultants are needed to make it work. Buyers need all of these factored into the total cost.

Market segmentation is nearly nonexistent. The analysis feels like it was put together with a one-size-fits-all approach, which is, ironically, the opposite of what a meaningful CX solution should do.

Then there's the omission of vendors below the $72M revenue threshold, a cutoff that seems entirely arbitrary and conveniently excludes any company that is genuinely transforming the market but hasn't yet hit that number. It's also unclear what revenue is being counted. If you’re an AWS customer, there’s a lot buried in your bill just to make Connect work. Where is that captured?

Instead of focusing on an arbitrary revenue floor, why not pay attention to growth velocity and the rate of legacy displacement? And while we're at it, let’s also differentiate organic growth from acquisition-driven gains. That's how you tell the story of who’s truly winning over new clients versus just buying them.

Knowledge management is another critical area where many vendors fall short. A successful AI implementation requires not only access to quality data but also the ability to manage and leverage that knowledge effectively. Without a robust knowledge management system, AI solutions are left trying to make sense of fragmented, unstructured information, ultimately limiting their effectiveness. The ability to curate, organize, and seamlessly access relevant information is a core differentiator that separates functional AI solutions from those that merely check a box.

And let’s not forget enterprise application integrations. Some vendors in this report are famed for their Black Boxes and Walled Gardens, yet that didn’t seem to make the cut either. But for AI to provide real value, it needs to seamlessly integrate with the broader enterprise ecosystem—whether it's CRM, ERP, or other operational systems. Too often, we see vendors touting their AI capabilities without addressing the challenges of integrating with existing enterprise applications. Robust integrations are essential for ensuring that AI solutions are not siloed but are instead enhancing workflows and creating value across the organization.

The contradictions are all too apparent. If successful CX and CCaaS hinge on Generative AI, and AI truly hinges on data, APIs, knowledge management, and integrations, how can we trust rankings that ignore these foundational elements in favor of subjective evaluations?

The Muggle Quadrilateral presents itself as definitive, but it’s really just a collection of opinions—often contradictory ones—masquerading as fact. Perhaps it's time we, as an industry, stop letting subjective whims shape the landscape and instead get back to first principles: What genuinely makes a technology great? How do we ensure buyers can evaluate with clarity? And how do we stop conflating hype with value?

What if we judged CCaaS solutions by customer outcomes, integration capability, and real-world impact rather than by arbitrary scoring? We need to rethink how we assess these solutions, focusing on the elements that drive true value for customers. The real magic isn’t in a quadrant; it’s in the tangible results that enhance customer experience and operational efficiency.